Overview:

The goal of this project is to integrate with the Exodus platform and use its API to connect a range of applications and services. The integration is intended to enable additional functionality, data sharing, and automation across platforms. This document describes the integration's scope, timeline, setup, team, and expected benefits.

Project Scope:

The project scope covers the following areas, among others:

- API Integration: We will work with the Exodus platform’s API to establish secure, reliable connections. This requires a detailed understanding of the API endpoints, authentication mechanisms, and data formats.

- Application Compatibility: The integration will support a wide range of applications and services across industries and use cases, including popular software suites, IoT devices, and cloud services.

- Data Exchange: The project will enable data exchange between the Exodus platform and connected applications, supporting real-time data sharing, synchronization, and analytics.

- Functionality Enhancement: Integration with the Exodus platform is intended to extend the functionality of connected applications, for example with automated data backups, secure file transfers, and multi-platform notifications.

- Security and Compliance: Security is a top priority. We will protect data both in transit and at rest, and compliance with data privacy regulations such as GDPR and HIPAA will be a key consideration.

- Scalability: The integration architecture will be designed to accommodate growth, so that additional applications and services can be added to the ecosystem as needed.
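The API Integration item depends on the authentication mechanism the Exodus API actually uses, which this document does not specify. As a minimal sketch, assuming a bearer-token scheme, the helper below builds (without sending) an authenticated request using Python's standard `urllib.request`. The base URL `https://api.example.com/v1`, the `build_request` name, and the header scheme are hypothetical placeholders, not the real Exodus API.

```python
import urllib.request

# Hypothetical placeholder: the real Exodus API base URL is not given here.
API_BASE_URL = "https://api.example.com/v1"


def build_request(path, api_key, base_url=API_BASE_URL):
    """Build, but do not send, an authenticated GET request.

    Assumes a bearer-token scheme; check the Exodus API documentation
    for the authentication mechanism it actually uses.
    """
    if not api_key:
        raise ValueError("an API key is required")
    # Join base URL and path without doubling or dropping the slash.
    url = base_url.rstrip("/") + "/" + path.lstrip("/")
    req = urllib.request.Request(url, method="GET")
    req.add_header("Authorization", "Bearer " + api_key)
    req.add_header("Accept", "application/json")
    return req


if __name__ == "__main__":
    req = build_request("/accounts", "test-key")
    print(req.get_method(), req.full_url)
    print(req.get_header("Authorization"))
```

The returned request can be sent with `urllib.request.urlopen(req, timeout=10)`. Keeping request construction separate from sending lets the authentication logic be tested without network access.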

Project Timeline:

The project is divided into the following phases:

-

Planning and Analysis: In this initial phase, we will conduct a thorough analysis of the Exodus API and identify the integration requirements.

-

Development: The development phase will involve building the necessary connectors and middleware to enable communication between the Exodus platform and various applications.

-

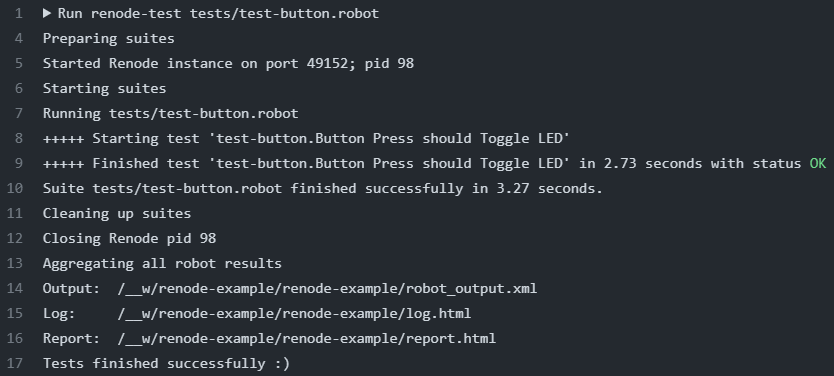

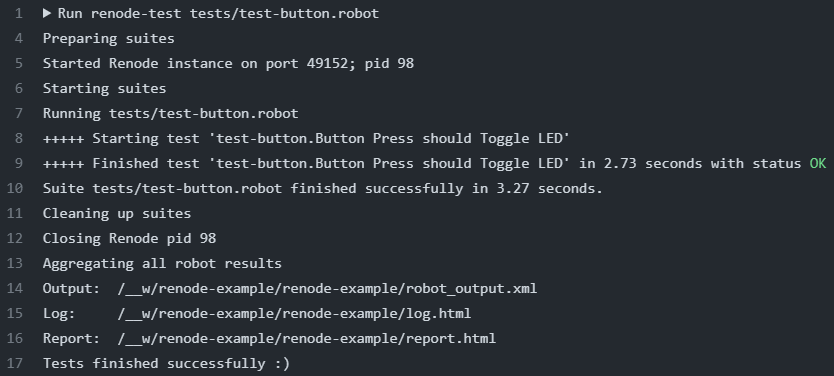

Testing: Rigorous testing will be conducted to ensure the reliability, security, and performance of the integration.

-

Deployment: Once testing is successful, the integration will be deployed, and initial connections with select applications will be established.

-

Optimization: Continuous optimization and refinement will be carried out to enhance the integration’s efficiency and effectiveness.

-

Scaling and Maintenance: As more applications are integrated, the system will be regularly maintained and scaled to meet growing demands.
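The Development phase calls for connectors and middleware but does not fix their design. One possible shape, sketched here under that assumption, is a common connector interface plus a small router that moves records between registered connectors. The names `Connector`, `InMemoryConnector`, and `Middleware` are hypothetical and only illustrate the pattern.

```python
from abc import ABC, abstractmethod


class Connector(ABC):
    """Hypothetical interface that each application connector implements."""

    name = "base"

    @abstractmethod
    def fetch(self):
        """Pull records from the connected application."""

    @abstractmethod
    def push(self, records):
        """Send records to the connected application; return how many were sent."""


class InMemoryConnector(Connector):
    """Toy connector backed by a Python list, useful for tests."""

    def __init__(self, name, records=None):
        self.name = name
        self.records = list(records or [])

    def fetch(self):
        # Return a copy so callers cannot mutate the stored records.
        return list(self.records)

    def push(self, records):
        self.records.extend(records)
        return len(records)


class Middleware:
    """Routes records between registered connectors by name."""

    def __init__(self):
        self._connectors = {}

    def register(self, connector):
        if connector.name in self._connectors:
            raise ValueError("duplicate connector: " + connector.name)
        self._connectors[connector.name] = connector

    def sync(self, source, target):
        # Raises KeyError if either connector name is not registered.
        records = self._connectors[source].fetch()
        return self._connectors[target].push(records)
```

Adding a new application then means writing one more `Connector` subclass and registering it, which fits the Scalability goal in the project scope.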

To run the project, you need:

- Python 3.6+

- Flask framework

- Exodus API access (API keys and access permissions)

- …

To set up and run the project, follow these steps:

- Clone the project from this repository:

git clone https://github.com/yourusername/yourproject.git

- Navigate to the project folder:

cd yourproject

- Install the required dependencies:

pip install -r requirements.txt

- Create the configuration file from the example, then add your API keys to config.ini:

cp config.example.ini config.ini

- Start the application:

python app.py

- Open your browser and go to http://localhost:5000 to begin using the application.
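The configuration step copies config.example.ini to config.ini, but the file's layout is not documented here. A minimal sketch, assuming an `[exodus]` section with an `api_key` option (both hypothetical names), reads the key with Python's standard `configparser` module and lets an `EXODUS_API_KEY` environment variable (also a hypothetical name) override it, so the key does not have to be stored on disk.

```python
import configparser
import os


def load_api_key(path="config.ini", section="exodus", option="api_key"):
    """Return the API key, preferring the EXODUS_API_KEY environment variable.

    Assumed (hypothetical) config.ini layout:

        [exodus]
        api_key = your-key-here
    """
    env_key = os.environ.get("EXODUS_API_KEY")
    if env_key:
        return env_key
    parser = configparser.ConfigParser()
    # read() returns the list of files it parsed; an empty list means missing.
    if not parser.read(path):
        raise FileNotFoundError(path + " not found; copy config.example.ini first")
    # Raises NoSectionError/NoOptionError if the assumed layout is absent.
    return parser.get(section, option)
```

Checking the environment first is a common convention for secrets; if config.ini is used instead, it should be kept out of version control.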


Project Team:

To successfully execute this project, a dedicated team with diverse skills will be assembled. This team may include:

- Project Manager – Cole Chandler

- Software Developers – Jose West, Melvin Quinn

- API Specialists – Lloyd Barton, Kyran Gibbs

- Security Experts – Ajay Ayala, Lloyd Barton

Project Benefits:

The successful integration with the Exodus platform will bring numerous benefits, including:

- Streamlined data sharing and automation across various applications.

- Improved productivity and efficiency for users of connected applications.

- Enhanced data security and compliance with industry regulations.

- Scalability to accommodate future growth and new applications.

- Potential for revenue generation through premium features and services.

For more information on using the Exodus API, refer to the API documentation.

If you’d like to contribute to this project, please follow these steps:

- Fork this project.

- Create a new branch:

git checkout -b feature/your-feature-name

- Commit your changes:

git commit -m 'Add new feature'

- Push the branch to your fork:

git push origin feature/your-feature-name

- Open a pull request.

Conclusion:

The integration with the Exodus platform is an opportunity to build a robust, interconnected ecosystem of applications and services. Delivering it will require careful planning, technical expertise, and a commitment to a secure and reliable integration. We expect the project to open new possibilities and deliver substantial value to our users and partners.

Open Source Approach:

Open source code is central to the project’s success, including its integration with the Exodus platform, because it reflects our commitment to transparency, collaboration, and innovation. The following sections explain why open source matters and how it benefits both the project and the wider developer community.

Transparency and Accountability:

Open source code makes the project transparent. Public access to the source code helps build trust with users, partners, and stakeholders, because anyone can review the code to check whether it follows best practices, security standards, and ethical guidelines. This transparency holds us accountable for the quality and integrity of the integration.

Community Collaboration:

Community collaboration is at the heart of open source. By open-sourcing our code, we invite developers around the world to contribute their expertise, ideas, and improvements. This collective effort can speed up development, help find and fix bugs, and introduce new features.

Knowledge Sharing:

Openly sharing code promotes knowledge exchange. Developers can gain insights into integration strategies, API interactions, and best practices by studying our codebase. This educational facet of open source benefits both seasoned developers and those looking to enhance their skills.

Flexibility and Customization:

Open source code lets users tailor the integration to their needs. They can modify the codebase to work with their own set of applications and services, so the solution matches their requirements.

Cost-Efficiency:

Open source code can reduce development costs substantially. Reusing existing open source libraries, frameworks, and components speeds up development while keeping expenses in check, which is particularly valuable for projects with limited budgets.

Long-Term Sustainability:

Open source code supports long-term sustainability. Even if the original development team changes or disbands, the open source community can continue to maintain and improve the codebase. This improves the chances that the integration stays viable and up to date.

Licensing and Legal Compliance:

When sharing code as open source, selecting an appropriate open source license is crucial. This license clarifies how the code can be used, modified, and distributed, ensuring legal compliance while safeguarding intellectual property rights.

In conclusion, making open source a core part of our integration with the Exodus platform reflects our commitment to transparency, collaboration, and innovation. It improves the quality and sustainability of the integration, contributes to the broader developer community, and encourages a culture of shared knowledge and progress. Open source is a cornerstone of the project’s success, with benefits that extend beyond the project itself.

This project is licensed under the Project License. For more details, check the license file.

https://github.com/automiation63y/Vexor-Exodus-Wallet-Integrations-API-Usage-Web3-WalletConnect